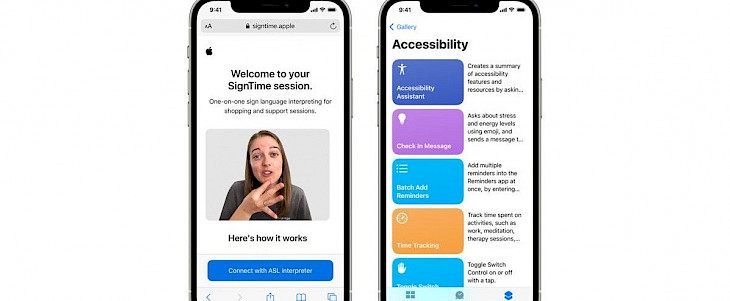

Apple today unveiled new software features designed for people with mobility, vision, hearing, and cognitive disabilities. These features reflect Apple's belief that accessibility is a human right, and they build on the company's long history of accessibility features that let all users customize Apple products.

People with limb differences will be able to navigate Apple Watch using AssistiveTouch later this year, thanks to software updates across all of Apple's operating systems. The iPad will also support third-party eye-tracking hardware for easier control. Apple's VoiceOver screen reader will get even smarter, using on-device intelligence to explore objects within images.

Apple is also adding new background sounds to help minimize distractions in support of neurodiversity, and Made for iPhone (MFi) will soon support new bi-directional hearing aids for those who are deaf or hard of hearing.

Customers of the Apple Store and Apple Support will soon be able to use a new service called SignTime, which connects them with AppleCare and Retail Customer Care teams using sign language. SignTime will be available from May 20.

According to Apple, SignTime will be available in three languages: American Sign Language (ASL) in the US, British Sign Language (BSL) in the UK, and French Sign Language (LSF) in France. Sessions take place in a web browser.

In many countries, Apple Stores also offer free sign language interpreter appointments that can be booked ahead of a visit.

SignTime is one of several new accessibility features Apple introduced. AssistiveTouch for Apple Watch, Background Sounds, and other features will arrive later this year. On May 20, Apple Stores will host virtual Getting Started sessions in American Sign Language and British Sign Language to mark Global Accessibility Awareness Day.

AssistiveTouch for watchOS is a new accessibility feature for Apple Watch users with limited mobility. It lets users with upper body limb differences enjoy the benefits of the Apple Watch without ever touching the display or controls.

Apple Watch can detect subtle differences in muscle movement and tendon activity using built-in motion sensors such as the gyroscope and accelerometer, along with the optical heart rate sensor and on-device machine learning. This lets users navigate a cursor on the display through a series of hand gestures, such as a pinch or a clench.

With AssistiveTouch on Apple Watch, customers with limb differences can more easily answer incoming calls, control an onscreen motion pointer, and access Notification Center, Control Center, and other features.

Eye-Tracking Support for iPad

iPadOS will support third-party eye-tracking devices, allowing users to control the iPad with their eyes alone. Later this year, compatible MFi devices will track where a person is looking onscreen, and the pointer will move to follow the person's gaze, while extended eye contact performs an action, like a tap.

Explore Images with VoiceOver

VoiceOver, Apple's screen reader for blind and low vision users, is getting new features. Building on recent updates that brought Image Descriptions to VoiceOver, users can now explore even more details about the people, text, table data, and other objects within images.

Users can navigate a photo of a receipt like a table, row by row and column by column, complete with table headers. VoiceOver can also describe the position of a person and other objects within an image, allowing users to relive memories in greater detail, and with Markup, users can add their own image descriptions to personalize family photos.