Apple is facing a barrage of criticism from privacy activists and other critics, who claim that its child safety features, including CSAM detection, raise a number of red flags. While part of the backlash may be attributed to misinformation stemming from a fundamental misunderstanding of Apple's CSAM detection technology, other critics raise real concerns about mission creep and privacy breaches that Apple did not address at the outset.

A recap of Apple's CSAM initiative

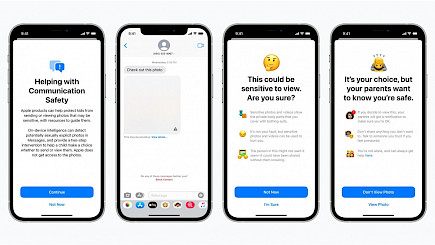

Apple planned to release three new child safety features developed in collaboration with child safety experts. First, new communication tools would allow parents to play a more informed role in helping their children navigate online communication. The Messages app would use on-device machine learning to warn users about potentially harmful material while keeping private messages unreadable by Apple.

Next, iOS and iPadOS would use new applications of cryptography to help limit the spread of CSAM online while maintaining user privacy. This detection would allow Apple to provide law enforcement with vital information about CSAM collections in iCloud Photos.

As part of the iOS 15 and iPadOS 15 updates later this year, the CSAM detection feature would be able to recognize photographs saved in iCloud Photos that depict sexually explicit activities involving children.

Apple's approach to identifying known CSAM is "built with user privacy in mind," according to the company. To detect child abuse photographs, the company said, it does not directly view customers' photos and instead uses an on-device, hash-based matching algorithm.
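To make the idea of hash-based matching concrete, here is a minimal sketch in Python. It is an illustration only, not Apple's implementation: it uses SHA-256, an ordinary cryptographic hash from the standard library, as a stand-in for whatever image hash Apple actually uses, and the function names (`image_digest`, `build_known_hashes`, `find_matches`) and sample data are hypothetical. The point it shows is that the comparison happens on hash values, so the matching step never has to look at what a photo actually shows.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    """Return a hex digest of the raw image bytes.

    Assumption: SHA-256 is a stand-in here. It only matches byte-identical
    files, unlike an image hash designed to tolerate resizing or recompression.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def build_known_hashes(known_images: list[bytes]) -> set[str]:
    """Build the set of hashes that stands in for the known-image database."""
    return {image_digest(image) for image in known_images}


def find_matches(photo_library: list[bytes], known_hashes: set[str]) -> list[int]:
    """Return the positions of library photos whose hash is in the known set.

    Only hashes are compared; the photos themselves are never inspected.
    """
    return [
        index
        for index, photo in enumerate(photo_library)
        if image_digest(photo) in known_hashes
    ]


if __name__ == "__main__":
    # Hypothetical placeholder data: real inputs would be image files.
    known = build_known_hashes([b"known-image-a", b"known-image-b"])
    library = [b"holiday-photo", b"known-image-a", b"cat-photo"]
    print(find_matches(library, known))
```

In this toy version, only the second photo in the library is reported, because its bytes are identical to an entry in the known set. A production system would need a hash that still matches after an image is resized or re-encoded, which this sketch does not attempt.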


What’s the new update?

Following backlash from critics, Apple says it will delay the release of Child Sexual Abuse Material (CSAM) detection tools "to make improvements." One of the features is a check for known CSAM in iCloud Photos, which has raised concerns among privacy activists.

According to a Reuters report, employees have posted more than 800 messages to a Slack channel on the topic, which has remained active for days since the announcement of Apple's CSAM measures. Those who object to the planned deployment worry about possible government abuse, a speculative scenario that Apple dismissed in a new support document and in public remarks this week.

The resistance within Apple appears to be coming from employees who are not part of the company's core security and privacy teams. According to Reuters sources, those working in security did not appear to be "big complainants" in the posts, and several backed Apple's position, arguing that the new mechanisms are a reasonable response to CSAM.

According to the report, some staff have pushed back against the criticism in a thread about the planned photo "scanning" function (the tool checks image hashes against a hashed database of known CSAM), while others believe Slack is not the place for such conversations. Some workers believe the on-device technologies will pave the way for full end-to-end encryption of iCloud.