Apple's latest iPhone update is a "shocking" change in direction for a billion iMessage customers.

The new version of iMessage, arriving in an upcoming iOS release, will most probably change traditional texting completely by overhauling how the app handles security.

The update will affect not only apps like Messenger and WhatsApp but also iPhone users who use several messengers at once.

Here’s more about it:

The Big News:

Even though Facebook is a data-hungry company, WhatsApp's end-to-end encryption gives users a reason to trust the app. WhatsApp cannot read the content of your messages, although the metadata around them is still available for inspection.

iMessage, Signal, secret conversations on Facebook Messenger, Telegram, and Google's latest end-to-end encrypted Android Messages updates all offer the same protection. This level of security has fueled the heated debate between lawmakers and technology platforms over who can access that content.

Apple's iOS 15.2 beta is set to change everything. Its child safety plans are right to want to protect minors, but the approach is wrong: it uses on-device AI to detect explicit images sent or received by users. This will be the first time that any type of content monitoring has been implemented on a major encrypted messenger.

The EFF warned users that "whatever Apple calls it, it's no longer secure messaging," adding that the company's compromise on end-to-end encryption is "a shocking about-face for users who have relied on the company's leadership in privacy and security."

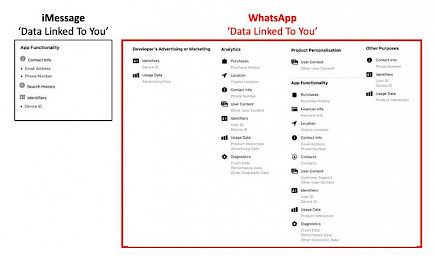

iMessage is a weird hybrid in terms of security. It has the best encryption architecture in the industry, seamless multi-device access, and rolling backups. However, it also stores encryption keys in iCloud backups that are not end-to-end encrypted.

Worse, if an iMessage user sends a message outside Apple's walls, it reverts to SMS, a technology with poor security. iMessage is no longer a platform that we recommend.

WhatsApp is my preferred daily messenger. It has the right mix of security and scale. It likely sends more encrypted messages than all the other messengers. It has also publicly championed the importance of encryption, and it stands to lose the most if that security turns out to be flawed.

Apple's iMessage update must be enabled by an adult for children within the same family group. Apple originally planned to warn over-13s when they were receiving or sending explicit content, and to also notify parents if under-13s ignored the warnings and viewed the imagery regardless. Apple has since revised its plans, and the iOS 15.2 beta does not go beyond an on-device warning for minors.

Apple claims iMessage doesn't breach end-to-end encryption.

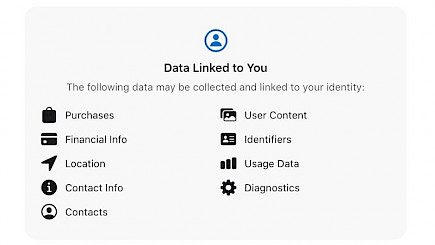

Apple claims that the update to iMessage does not affect its end-to-end encryption. This is technically correct, but in practice it does. Pegasus spyware works the same way: it compromises the endpoint of an end-to-end encrypted messenger. The encryption in transit stays intact, but an on-device compromise defeats the protection it is meant to provide.

As we have said, the core issue is accountability and reporting obligations. This is Big Tech's Achilles' heel when it comes to encryption defense. Legislators continue to explore ways to shift responsibility for policing content onto platforms rather than insist that certain encryption backdoors be used.

This was evident with the U.S.'s proposed EARN IT Act last year, which was widely seen as a way to give security agencies a route around encryption. Although the outcome was diluted by the public backlash against governments breaking into encrypted messaging platforms, there is still pressure to deliver.

Apple's proposed iMessage upgrade is a gift for security hawks who are pushing for such changes. Apple says it can use device-side AI to classify content and warn users when explicit imagery is detected. Apple claims it can do all of this without compromising end-to-end encryption. In effect, Apple claims it can achieve what lawmakers want: a best-of-both-worlds solution that just needs a few more classifiers and a reporting function.
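The flow Apple describes can be sketched roughly as follows. This is only an illustration under loud assumptions: `hypothetical_classifier`, `toy_encrypt`, and `send_image` are invented names, the classifier is a placeholder rather than a real model, and the XOR "encryption" is a toy stand-in for a real end-to-end protocol. The point it shows is that the classifier reads the plaintext on the device before encryption, so the ciphertext leaving the device is untouched even though the content has been inspected.

```python
import secrets
from dataclasses import dataclass


def toy_encrypt(plaintext: bytes, key: bytes) -> bytes:
    """XOR with a one-time key of equal length.

    Toy stand-in for real end-to-end encryption; applying it twice
    with the same key returns the original plaintext.
    """
    return bytes(p ^ k for p, k in zip(plaintext, key))


def hypothetical_classifier(image: bytes) -> bool:
    """Placeholder for an on-device ML model (assumption, not Apple's API).

    Here it only checks for a marker prefix so the example is runnable.
    """
    return image.startswith(b"EXPLICIT")


@dataclass
class SendResult:
    ciphertext: bytes  # the only thing that leaves the device
    warned: bool       # whether a local, on-device warning was shown


def send_image(image: bytes, key: bytes) -> SendResult:
    # Step 1: the classifier runs on the plaintext, before encryption.
    warned = hypothetical_classifier(image)
    # Step 2: the message is encrypted as usual and sent.
    return SendResult(ciphertext=toy_encrypt(image, key), warned=warned)


image = b"EXPLICIT:holiday.jpg"
key = secrets.token_bytes(len(image))
result = send_image(image, key)

print(result.warned)                                 # True
print(toy_encrypt(result.ciphertext, key) == image)  # True
```

Adding a reporting function would only require sending `warned` somewhere other than the local screen, which is why the step from on-device warning to reporting obligation looks so small.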

It is easy to defend encryption when there are no compromises. But it is quite different to defend a platform's inability, whether client-side or server-side, to report that a minor is being placed in danger.

WhatsApp provides a detailed explanation

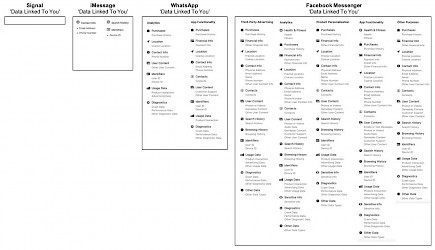

WhatsApp is now part of the Facebook/Meta empire, which already reports large amounts of child-harm imagery and other content. Facebook and Messenger can scan content for known abuse imagery.

WhatsApp, however, relies on metadata and other public-facing content, such as profile information and group names.

WhatsApp provides a detailed explanation of how it addresses child endangerment via its platform. It mines unencrypted metadata to identify patterns that it can flag.

This monitoring only has access to unencrypted content. The one exception is user reports: when a user reports a message, the content is pulled from that user's device and sent back to WhatsApp's moderators. The process is manual and requires the recipient to take proactive steps.
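A metadata-only check of this kind might look something like the sketch below. Everything in it is hypothetical: the `AccountMetadata` fields, the `SUSPICIOUS_TERMS` list, and the report threshold are invented for illustration and are not WhatsApp's actual signals. What it shows is that the check never touches message content, only unencrypted information such as group names and the number of user reports.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical watch-list; a real system would use far richer signals.
SUSPICIOUS_TERMS = {"watchlist-term"}


@dataclass
class AccountMetadata:
    account_id: str
    group_names: List[str]  # unencrypted, public-facing
    user_reports: int       # reports filed by other users


def flag_account(meta: AccountMetadata, report_threshold: int = 3) -> List[str]:
    """Return the reasons an account would be queued for manual review."""
    reasons = []
    for name in meta.group_names:
        if any(term in name.lower() for term in SUSPICIOUS_TERMS):
            reasons.append(f"group name: {name}")
    if meta.user_reports >= report_threshold:
        reasons.append(f"user reports: {meta.user_reports}")
    return reasons


meta = AccountMetadata("a1", ["Family chat", "WATCHLIST-TERM swap"], user_reports=4)
for reason in flag_account(meta):
    print(reason)
```

An empty list means nothing in the metadata stood out; message content is never an input to the function.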

When WhatsApp refers to unencrypted content, it does not include client-side data that has not yet been encrypted for sending to recipients. That is Apple's semantics. WhatsApp knows that users view the information contained within its app as being protected by its end-to-end encryption, even if that information has technically been decrypted or is not yet encrypted.

Apple's implicit definition of encryption would mean that all WhatsApp client-side content, as well as iMessage's, falls outside its boundaries. This is dangerous territory. It treats endpoints as fair game, at the expense of critical security. Apple can't have it both ways. Either it's end-to-end encrypted, or it's not.

Apple argues that iMessage remains end-to-end encrypted because Apple itself can't see any content. This argument was strengthened by its decision to drop the report-to-parents function that was part of its original plans.

But the argument is thrown out the window again once Apple has an externally crafted monitoring function inside its app. That invites a reporting obligation if the platform knows that something is seriously wrong.

WhatsApp says that it scans all unencrypted content and bans 300,000 accounts for suspected sharing of child exploitative imagery (CEI). However, the critical point is that it can only monitor content that has not been encrypted.

Apple now poses a difficult question for WhatsApp. WhatsApp is just as capable of building and introducing its own in-app classifiers to detect harmful content. The concept of reporting obligations is fundamentally changing. Today, the argument is that content monitoring is not possible on these platforms. However, what is currently black and white is about to turn very grey.

None of these points negates the importance of doing more to protect minors. WhatsApp points to its in-app reporting feature, which does not compromise its security. The iMessage update is not yet confirmed, and iMessage could start with a similar reporting function.

Social media platforms should not allow fully encrypted messaging. WhatsApp points out that you cannot search for strangers on its platform; you need their phone number to contact them. This is very different from Facebook, Instagram, and other social media platforms that include messaging. Facebook/Meta should not extend encryption to its messengers other than WhatsApp, as it has said it intends to. It should also explore AI monitoring to stop abuse.

iMessage, however, like WhatsApp, is not a social media platform. Strangers cannot find you on it. It is impossible to pretend that no line has been crossed. The EFF is correct. If you look beyond the debate around encrypted transport layers versus endpoints, the choice is binary.

Winding-up

For some time now, Apple has been working to push outside companies out of its ecosystem. From Intel processors to Meta's messengers, it seems to want rid of every one of them.

So, it might not be a shock if these apps are completely eradicated from Apple's device environment in the coming years.