Apple has been through a lot this year, and yet another controversy has now come to light. The company has not lived up to its own standards and has fallen short on protecting user security and privacy, with many bugs and controversies to its name this year. Wondering what the latest one is? Read on to the end to find out more. Without any delay, let's get started with today's topic.

iPhone users have had to endure a lot over the past few months, and the company's new CSAM detection system has been a source of much controversy. A shocking new report may just convince you to ditch your iPhone.

The concern

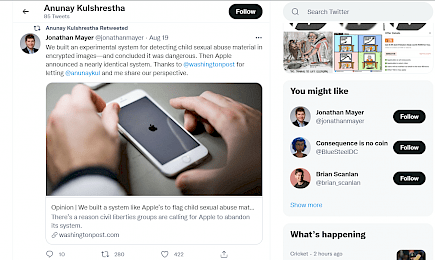

Two researchers who spent two years building a CSAM (child sexual abuse material) detection system similar to the one Apple wants to put on users' iPhones, iPads, and Macs next month have issued an unequivocal warning in a recent editorial published by The Washington Post: it's "dangerous".

"We wrote the only peer-reviewed publication on how to build a system like Apple's--and we concluded the technology was dangerous". Anunay Kulshrestha and State Jonathan Mayer and the two Princeton academics behind the research. This system could easily be repurposed to spy on and censor. The design was not restricted to any particular content type; the service could swap any content-matching databases without warning the person using it.

This is the primary concern regarding Apple's CSAM initiative. While the technology is intended to reduce child abuse, hackers and governments could manipulate the system to search your iCloud photos for whatever content they want reported. That makes this feature an obvious concern.

"Apple's second-largest market, with hundreds of millions of devices, is China. So what's stopping the Chinese government from requesting that Apple scan such devices for pro-democracy content? Ask the researchers."

Critics have plenty to say about this. Earlier this year, Apple was accused of compromising on surveillance and censorship in China after it agreed to move the personal data of its Chinese customers to the servers of a state-owned Chinese firm. Apple has also disclosed that it provided customer data to the US government almost 4,000 times last year! Kulshrestha and Mayer explain that they found other flaws too. "The content-matching system could produce false positives, and malicious users could use it to expose innocent users to scrutiny."

Recent history is not encouraging. Last month's Pegasus revelations exposed a worldwide business that had been successfully hacking iPhones for years and selling its technology to other agencies to monitor anti-regime activists, journalists, and political figures from rival countries. This could go a step further, as Apple's technology could scan and flag iCloud photos belonging to a billion iPhone users.

Before Mayer and Kulshrestha spoke out, more than 90 human rights organizations from across the world had already written to Apple, stating that the technology underpinning its CSAM system "will have laid the foundation for censorship, surveillance, and persecution on a global level".

Apple has now defended its CSAM system by saying it was poorly explained and that the announcement was a "recipe for this kind of confusion," but Mayer and Kulshrestha were unimpressed. They acknowledge that Apple's motivation, like their own, was to protect children, and that Apple's system was more technologically efficient and capable than theirs. "But, we were baffled when we saw that Apple didn't have any answers to the difficult questions we had raised."

Apple is now in an untenable position. For several years, the company has put considerable effort into marketing itself as the champion of user privacy and trying to earn the tag of "the privacy keeper, no matter what". In fact, the company's official privacy page is built around the idea of privacy as a fundamental human right. Here is a summary of it:

“Apple's privacy page starts by stating that privacy is a fundamental human right. At Apple, it is also one of the company's core values. Your devices are important to so many parts of your life, and what you share from those experiences, and who you share it with, should be up to you alone. Apple says it designs its products to protect your privacy and give you control over your information and over everything you do on your device. It's not always easy, but that's the kind of innovation Apple believes in.”

Our take on this?

Apple has not had a great year. There have been numerous controversies and bugs in Apple products, and many incidents suggest that those products are not as dependable as they once were. Apple is known for its serious, primary focus on security and privacy, but this year both were compromised. We hadn't expected this from such a big firm: the Wi-Fi bug, the automatic brightness bug, and the list goes on!

But, in the end, it all comes down to how Apple rolls out its CSAM feature. Apple may have miraculously found a way to make the feature safe, or it could go the other way. All we can do for now is wait and watch what Apple has to say.

Should you be optimistic or pessimistic? Well, keep a positive and open mind, but remember that this CSAM feature might change the way Apple has always preached about privacy.

We hope you liked the article. Be sure to share your views on this issue and, most importantly, don't forget to come back for more great content. Good day!