In recent times, Apple has left no stone unturned to provide a better and more secure platform for its users. With the arrival of iOS 15 and the iPhone 13, Apple is pushing its boundaries to keep every user's data private.

In a public statement last month, Apple announced a set of new child safety features, which it calls “Expanded Protections for Children”. The features are meant to give parents more oversight of what their children send and receive online. They also aim to protect young users from harmful content on the Internet and to limit the spread of CSAM.

What is CSAM?

Child Sexual Abuse Material (CSAM) refers to any content that depicts the sexual abuse or exploitation of children. Some well-known forms of CSAM include:

- Child pornography.

- Child molestation videos.

- Other sexually exploitative content involving children, whether written or audio-visual.

Over the years, there has been a serious growth in child pornography on the web. Children exploited in such material are often vulnerable, and some, including homeless or abandoned children, are coerced into it in exchange for money or food. Under the International Labour Organization's Worst Forms of Child Labour Convention (No. 182), using, procuring or offering any child under 18 for the production of pornography counts as one of the worst forms of child labour, and anyone involved in it can face criminal charges.

Why has there been a growth of CSAM?

CSAM has existed since the internet first went global, but back then it was well hidden. As technology grew, people came to know about the deep web (content that search engines do not index) and the dark web, a part of it that can be reached only through anonymizing software such as Tor. Because activity on the dark web is very hard to trace, people began selling CSAM in this lesser-known part of the web.

Another major reason for the growth of CSAM is rising demand for it. Over the years, more individuals, including some teenagers, have sought out CSAM and gone to great lengths to get hold of it.

Such CSAM content can:

- Increase the rate of crime and other illegal activity related to children all over the world.

- Lead to leaks of sensitive information about users and their children.

So, it is essential to stop CSAM from reaching parents and their children.

Steps taken by Apple

According to Apple, its new software will use new applications of cryptography to detect known CSAM images stored in iCloud Photos, while being designed to protect user privacy. This will also help Apple identify accounts that hold collections of known CSAM, so that legal action can be taken against the people who spread it.

Apple's new measures are as follows:

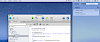

1. Communication safety in Messages

The Messages app will warn children when they receive or send sexually explicit photos. On-device machine learning blurs such an image and warns the child before it is viewed, and for younger children, parents can choose to be notified if the child views it anyway.

This feature will be available to accounts set up as families in iCloud on:

- iOS 15 and later versions

- iPadOS 15 and later versions

- macOS Monterey and later versions.

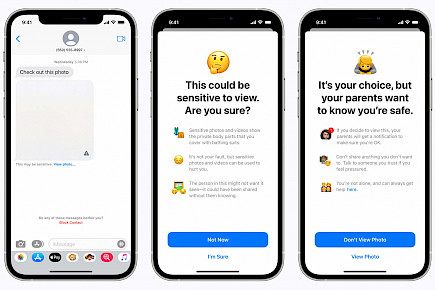

2. CSAM detection in iCloud Photos

Before an image is stored in iCloud Photos, the system software will check it on the device against known CSAM images provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations.

If an account crosses a set threshold of matches, Apple will manually review the flagged images. If they are confirmed as CSAM, Apple will disable the account and send a report to NCMEC, which works with law enforcement so that legal action can be taken against the people involved.

3. Expanded guidance in Siri and Search

Apple's voice assistant, Siri, along with Search, will be updated to step in when a user searches for CSAM-related topics, explaining that interest in this topic is harmful and pointing to resources for help. If a child or parent comes across CSAM while browsing the web, they will also be able to ask Siri how and where to report it.

This update will be available on:

- iOS 15 and later versions

- watchOS 8 and later versions

- iPadOS 15 and later versions

- macOS Monterey and later versions.